Artificial intelligence is no longer an abstract concept: it is now shaping how lawyers work, how clients make decisions, and how regulators attempt to catch up with technological change. This reality was at the centre of Cleary Gottlieb’s event on AI, ethics, and regulatory futures, a session that combined deep technical insight with reflections on what this moment means for the legal profession. Drawing on expertise in emerging technologies and internal risk advisory work, speakers Prudence and Kathryn delivered a rare blend of conceptual clarity and practical guidance, offering students and practitioners alike a candid look at the rapidly shifting responsibilities lawyers must assume in an AI-driven world.

Understanding What “AI” Really Means for Lawyers

The session began by dismantling the misconception that AI is entirely new to legal practice.

What is new is the rise of generative systems: tools capable of producing original content through deep-learning models that analyse and replicate patterns on a scale no human could match. Kathryn emphasised that although AI can serve as an extraordinarily powerful assistant, it remains exactly that: a tool. The work produced by a lawyer must still be their own work, a theme that resurfaced repeatedly throughout the event.

The speakers explained that generative AI operates through three interconnected layers: the training data on which the model is built, the prompt data provided by the user, and the output data produced in response. Understanding these layers is not simply a technical curiosity; it is becoming a professional necessity. Each stage carries distinct risks, from bias to hallucination to the misuse of confidential information. Cleary Gottlieb highlighted that law firms now occupy complex roles within the AI ecosystem: not just as users of technology, but often as advisors to clients who may themselves be developers, deployers, or heavily regulated end-users. The obligations that arise vary significantly depending on one’s role, making it essential for lawyers to identify where they sit in the AI supply chain at any given moment.

Machine Learning: Possibilities and Pitfalls

The conversation moved to machine learning, the branch of AI that allows computers to learn and adapt without being explicitly programmed. While this enables models to imitate human reasoning patterns, it also introduces an element of opacity that raises serious challenges for legal accuracy.

As Kathryn noted, attorneys have already faced sanctions for presenting AI-generated hallucinations as legitimate case law, a reminder that the duty of professional competence includes verifying the accuracy of every citation and argument, regardless of the tool used to produce it. The presenters stressed that lawyers cannot rely on AI to handle the “complex nuances” of legal analysis, particularly where judgment, context, or ethical obligations are involved.

Copyright in the Age of Generative AI

One of the most interesting parts of the event was the presenters’ discussion of intellectual property issues arising from AI-generated works. The Getty Images v. Stability AI litigation was highlighted as a vivid example of how deeply intertwined AI and IP law have become.

Surprisingly, much of the judgment in that case is devoted not to legal doctrine but to forensic analysis of the technology itself. The speakers argued that this is emblematic of the legal challenges ahead: lawyers must now understand technological structures in order to argue legal questions meaningfully.

The global picture of copyright protection remains fragmented. The United States requires a demonstrable level of human involvement in the creation of an AI-assisted work. The United Kingdom, by contrast, has long had provisions for computer-generated works but is now struggling to reconcile them with generative systems that operate in ways their drafters never anticipated. Meanwhile, China’s approach adds yet another layer of divergence. For clients, particularly those developing software or generating creative content, the absence of a settled international position on ownership is a real commercial risk. The speakers noted that questions as fundamental as “Do I own the output of my AI tool?” still have inconsistent answers depending on jurisdiction.

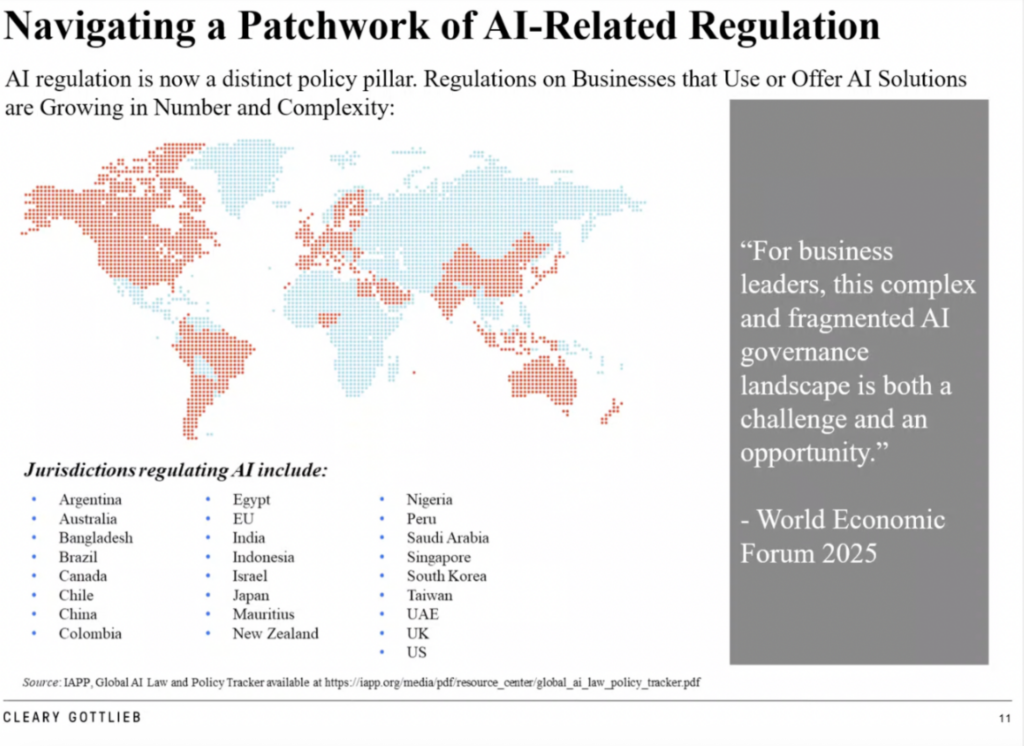

Regulating a Moving Target: The EU, the US, and the Global Patchwork

From intellectual property, the discussion turned to the rapidly developing regulatory landscape. The EU AI Act was presented as the most ambitious attempt to date to create a comprehensive regulatory framework. It is structured around a four-tier risk system that ranges from outright bans on unacceptable-risk practices, through requirements for high-risk systems and transparency obligations for limited-risk systems, to minimal-risk uses. Prudence emphasised that the Act applies not only to developers but to any actor in the AI supply chain, including businesses that merely use AI tools in the EU market. Its extraterritorial reach means that many companies outside the EU may nonetheless find themselves subject to compliance obligations.

The presenters stressed that the AI Act has become unusually contentious in recent months, with legislators attempting to adjust implementation timelines due to the volume of work required for compliance. Much of the regulation is principles-led, leaving businesses to interpret how transparency, oversight, and safety expectations should be operationalised. This ambiguity, while necessary in a fast-moving field, creates real compliance challenges for multinational clients.

In the United States, the regulatory landscape is even more fragmented. With no single federal AI law, individual states have taken divergent approaches. Some, like Colorado, have developed comprehensive frameworks that borrow directly from EU concepts. Others, such as California, focus on foundation models and transparency obligations, particularly whether consumers know they are interacting with a chatbot. For clients, this means navigating a maze of conflicting obligations that depend heavily on location, industry, and use case.

Ethical Use of AI: The Core Principles Lawyers Must Uphold

The ethical dimension of AI usage formed one of the richest parts of the event. Kathryn organised her discussion around four themes: human oversight, confidentiality, transparency, and lawful use. Each reflects traditional legal duties but gains new urgency in an AI-enabled environment.

Human oversight means ensuring that AI augments rather than replaces legal judgment. Lawyers have an obligation not only to review AI outputs but to maintain the ability to critique, contextualise, and correct them. The risk of delegating too much intellectual work to a tool that cannot reason, only predict, is substantial.

Confidentiality was presented as perhaps the most pressing ethical concern. Lawyers must avoid inputting client details into public AI tools, including subscription models, because these tools rarely guarantee that data will remain private. For a profession built on client trust, the possibility that confidential information could be reused as training data or accessed by third parties is unacceptable. The presenters warned that many people still underestimate how AI systems store and reuse data, making proactive data-protection measures essential.

Transparency extends to all stakeholders. Lawyers may be ethically compelled to disclose AI use to clients, especially when clients impose their own limitations on technological tools. Courts and regulators similarly expect honesty, a point underscored by recent high-profile cases in which attorneys submitted hallucinated judgments generated by AI. These incidents demonstrate not only professional risk but also reputational harm that can extend across an entire firm.

Finally, lawful use requires lawyers to be mindful of bias embedded in training data. The EU AI Act explicitly frames discriminatory output as a compliance breach, and the presenters encouraged lawyers to understand the origins and limitations of the models they use. It is not enough to trust the surface of an output; one must understand the underlying source material and whether it reflects fact, evidence, or the unfiltered opinions of an online forum.

Building Robust AI Governance in Law Firms

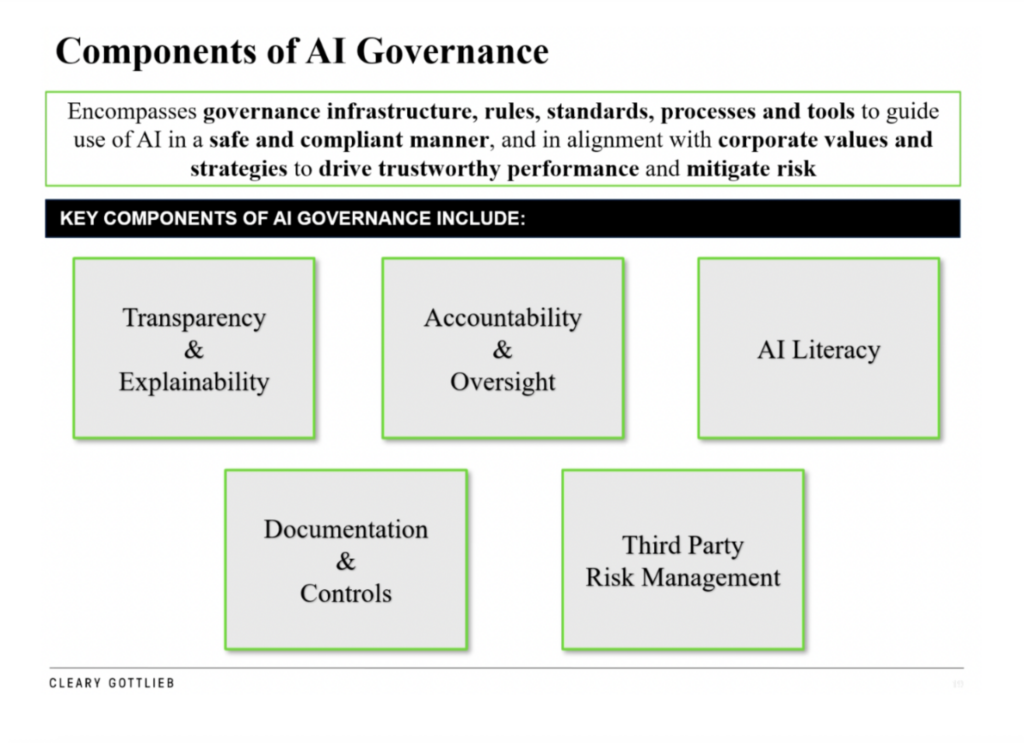

The event concluded with a discussion of AI governance, an area becoming central to modern legal practice. Cleary Gottlieb’s own approach involves a cross-functional team spanning risk, information security, enterprise applications, innovation, privacy, and contractual considerations.

The aim is to build a governance structure that integrates AI use into a firm’s existing safety and compliance architecture. This includes developing internal codes of practice, managing third-party risks, establishing documentation protocols, strengthening lawyer training, and ensuring that any use of AI aligns with corporate values and professional standards.

Governance is not merely bureaucratic. It helps build confidence among regulators, reassures clients, and supports long-term competitiveness. As the speakers noted, no developer or firm can innovate in isolation; industry-wide collaboration is becoming increasingly important, including joint codes of practice that allow firms to approach AI with consistency and shared standards.

A Profession in Transition

The Cleary Gottlieb event made one thing clear: the legal profession is entering a period of accelerated transformation, driven by tools that challenge traditional assumptions about authorship, confidentiality, accuracy, and regulatory responsibility. Yet the speakers presented AI not as a threat but as a catalyst, an opportunity for lawyers to enhance their work, provided they approach it with clarity, caution, and ethical grounding.

For students and future lawyers, the message is both informative and empowering. A new form of legal literacy is emerging, one that blends doctrinal knowledge with technological understanding and ethical sensitivity. AI will undoubtedly reshape practice, but it will not replace the lawyer who remains analytical, informed, and vigilant. Instead, it enhances the role of the modern lawyer: someone capable of navigating uncertainty, interrogating technology, and guiding clients through a regulatory landscape that grows more complex by the month. This event provided not only an overview of today’s AI challenges but also a vision of the legal profession’s future.

A big thank you to Shilan for this comprehensive piece; reading it feels like we were actually there! Lots to go away and research now… Thanks also to Cleary Gottlieb for delivering this for City Law School students.

Shilan Shokohi is a Graduate Entry LLB student with a background in Criminology and Police Studies from Simon Fraser University in Canada. Her academic interests lie in corporate, finance, and contract law, and she is particularly interested in how legal systems shape the ways businesses and individuals interact.

She says that “…moving to London has given me a new appreciation for how deeply the law influences daily life, from commercial transactions to social structures, and has strengthened my commitment to exploring law in both its theoretical and practical dimensions.”